Exploring NATS as a backend for k3s

Byron Ruth — May 9, 2023

k3s is a lightweight Kubernetes distribution suitable for IoT and edge computing environments. One component k3s leverages is KINE, a shim that enables replacing etcd with alternate storage backends. It originally targeted relational databases.

In April 2022, the v0.9.0 release of KINE introduced native support for NATS as a backend. In June 2022, the k3s v1.23.7+k3s1 release included this KINE version, making it possible for k3s deployments to connect to an existing NATS system.

The KINE backend leverages the Key-Value API built on top of the NATS persistence subsystem called JetStream.

To bootstrap a minimal k3s server backed by NATS, start a JetStream-enabled nats-server, then start k3s server with the --datastore-endpoint option configured.

```shell
# Run in the background or in the foreground in a different shell.
nats-server -js &

# Point k3s to the default NATS address.
k3s server --datastore-endpoint=jetstream://localhost:4222
```

When the k3s server starts, it creates a KV bucket within NATS if one does not already exist. When used with a NATS cluster, the bucket can be configured with multiple replicas for high availability and fault tolerance of the data.
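As a rough sketch of the replica setup, the endpoint can carry options as query parameters. The host name, bucket name, and replica count below are hypothetical, and the bucket and replicas option names are assumptions based on the KINE NATS driver, so verify them against the KINE docs for your version. A replica count of 3 assumes a NATS cluster with at least three JetStream-enabled servers.

```shell
# Hypothetical address of one server in an existing NATS cluster.
NATS_HOST="nats-1.example.com:4222"

# Assumed option names: bucket (KV bucket name) and replicas (copies kept
# across the cluster). Confirm both against the KINE docs for your version.
DATASTORE_ENDPOINT="jetstream://${NATS_HOST}?bucket=k3s-demo&replicas=3"

# Print the endpoint to check it before use.
echo "${DATASTORE_ENDPOINT}"

# Then start k3s with it. The quotes keep the shell from treating "&" as
# a background operator.
#   k3s server --datastore-endpoint="${DATASTORE_ENDPOINT}"
```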

Global, multi-tenant infrastructure

What does using NATS give you out of the box?

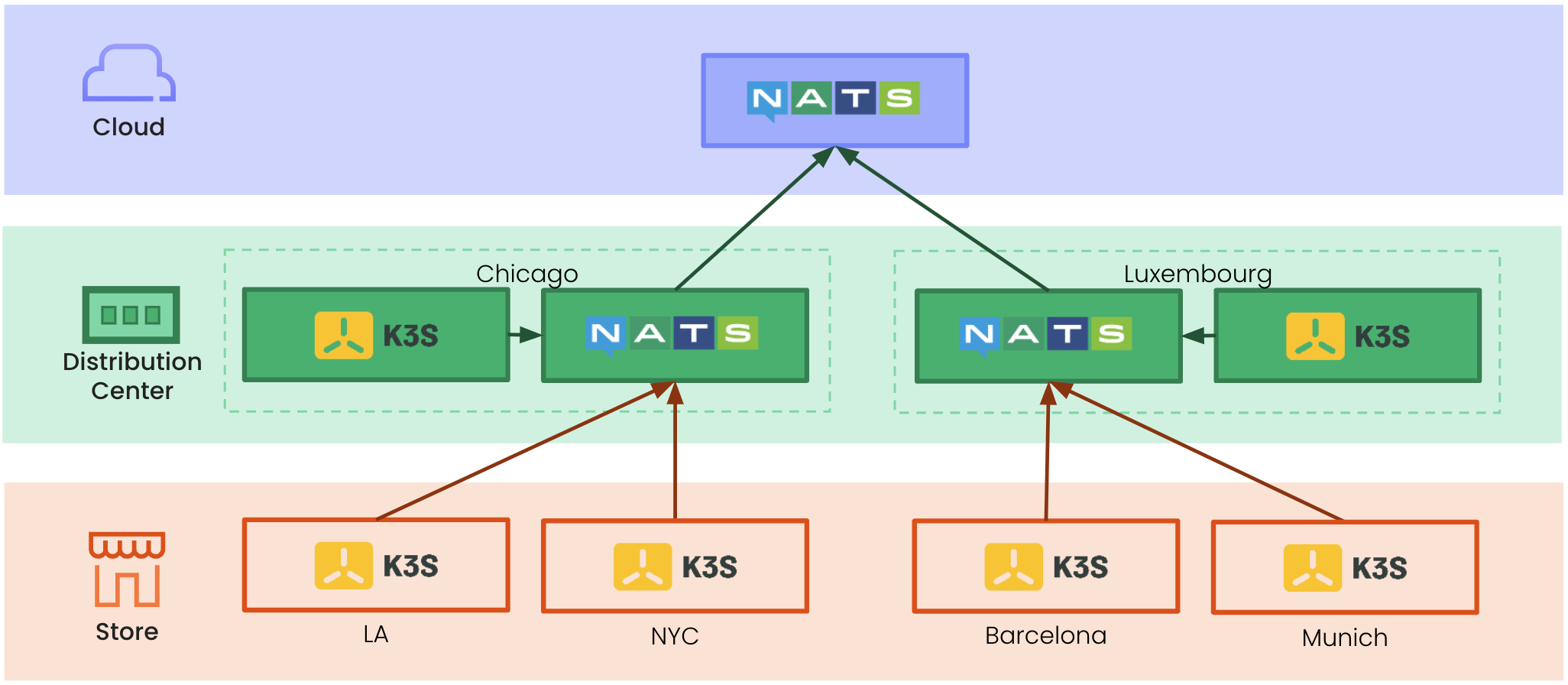

During RethinkConn 2022, Caleb Lloyd presented "Using NATS JetStream as a KINE backend for k3s", where he demonstrated the ability to deploy multiple k3s clusters in different regions, all connected to a global, multi-cloud deployment of NATS (in this case Synadia’s NGS).

The main takeaways:

- Each k3s cluster can have its own isolated account and KV bucket to store cluster state on a shared NATS system.

- Applications running in k3s can use the same NATS system as k3s for their workloads (within their own accounts).

- Cross-region mirrors of the KV buckets can be created for straightforward backup/restore disaster recovery scenarios.

Because this is one NATS system, an operator gets end-to-end visibility and management of all of these assets out of the box. Check out the k3s-on-nats demo repo to try it out yourself.

Embedded NATS

Last week (May 2023), the v0.10.0 KINE release landed support for embedding the NATS server. This is possible because KINE and NATS are both written in Go and the server can be imported as a package.

At the time of this writing, a k3s release with this new version of KINE and embedded NATS is not yet available. What is discussed below works, but currently requires manually building k3s.

Starting k3s with this version of KINE embedded is now reduced to the following command (without needing to start an external NATS server).

```shell
k3s server --datastore-endpoint=nats://
```

See a one-minute demo of how it works.

The minimal nats:// endpoint relies on the NATS server defaults: listening on all interfaces (0.0.0.0) and binding to port 4222. If you don’t want to embed the server, you can add the query parameter noEmbed and KINE will connect to a remote system instead.
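For illustration, connecting to an external server with the newer scheme might look like the sketch below. The host name is hypothetical, and passing noEmbed with an explicit true value is an assumption; the text above only states that the parameter exists.

```shell
# Hypothetical address of an external NATS server.
NATS_HOST="nats.example.com:4222"

# noEmbed asks KINE to skip the embedded server and connect to NATS_HOST.
# The "=true" value form is an assumption; check the KINE docs.
DATASTORE_ENDPOINT="nats://${NATS_HOST}?noEmbed=true"

# Print the endpoint to check it before use.
echo "${DATASTORE_ENDPOINT}"

# Then start k3s with it:
#   k3s server --datastore-endpoint="${DATASTORE_ENDPOINT}"
```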

What do we gain with an embedded server?

The original motivation was to further reduce the distribution and dependency footprint, resulting in the option of a single k3s binary with KINE and NATS embedded. In addition to the reduced dependency footprint, there are multiple benefits over etcd and SQLite:

- The nats:// endpoint supports a serverConfig query param pointing to a local NATS config file. This gives the flexibility of configuring the instance as a Leaf Node, which enables the secure extension of an existing NATS system. Leaf nodes initiate connections to the remote servers, making them friendly for network environments with heavy use of network address translation (NAT).

- Building on the above example showcased in the RethinkConn talk, embedded NATS makes it possible to extend to the edge and maintain local state if remote connectivity to a NATS cluster is lost, without sacrificing visibility or management capabilities.

- A single binary provides all of the advantages of NATS combined with a workload scheduler. As a result, your data lives on and traverses one system, unifying design, development and operations.
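The leaf node setup described in the list above could be sketched as follows. All host names, ports, and paths are hypothetical, the remote is assumed to accept leaf node connections on port 7422 (the NATS default for leaf nodes), and whether the embedded server honors every setting in the file is an assumption to verify against the KINE docs.

```shell
# Hypothetical location for the NATS config file.
CONFIG_FILE="${TMPDIR:-/tmp}/k3s-nats-leaf.conf"

# Write a minimal NATS server config that enables JetStream locally and
# dials out to a remote NATS system as a leaf node.
cat > "${CONFIG_FILE}" <<'EOF'
jetstream {
  # Hypothetical directory for local JetStream state.
  store_dir: "/var/lib/k3s-nats"
}

leafnodes {
  remotes = [
    {
      # Hypothetical hub address.
      url: "nats-leaf://hub.example.com:7422"
    }
  ]
}
EOF

# Point the embedded server at the config file via serverConfig.
DATASTORE_ENDPOINT="nats://?serverConfig=${CONFIG_FILE}"
echo "${DATASTORE_ENDPOINT}"

# Then start k3s with it:
#   k3s server --datastore-endpoint="${DATASTORE_ENDPOINT}"
```

A real deployment would typically also add credentials to the remote entry; that is omitted here to keep the sketch short.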

Having a single binary with k3s and NATS is a superpower!

If you are interested in the options available for configuring the KINE datastore endpoint, check out the NATS examples document in the KINE repo.

Future efforts

Having NATS available as a KINE/k3s backend was a great start, and having the option of embedding NATS is a superpower for edge deployments. However, there are a few other areas the NATS team is exploring, including:

- Native support for NATS in k3s' embedded HA/cluster mode (join the discussion on the GitHub issue)

- How NATS might be leveraged within Fleet

- What it would take to set up an agent-less k3s mirror of another instance, leveraging NATS mirror/sourcing capabilities

If you find any of this interesting, have use cases to share, or want to show your support, please join us on Slack!

Finally, the NATS team wants to give a BIG thank you and shoutout to the k3s/KINE team for the support and guidance on making NATS available as a backend. ❤️


About the Author

Byron Ruth is the Director of Developer Relations at Synadia and a long-time NATS user.
